Stop leaking your company secrets to ChatGPT

PromptGuard analyzes your prompts in real time and automatically masks sensitive data before it is ever sent to an LLM.

Trusted by companies where security matters

Your organization is leaking sensitive data without knowing it

API keys, tokens, and passwords are often pasted into prompts without a second thought. Once sent, they are outside your systems and outside your control.

Project names, client details, and legal notes get casually dropped into LLMs to move faster or get help, with no filter in between.

Most companies have zero visibility into what their teams are actually sending to AI tools. They don't know what was leaked, when, or by whom.

Your prompts, protected from the inside

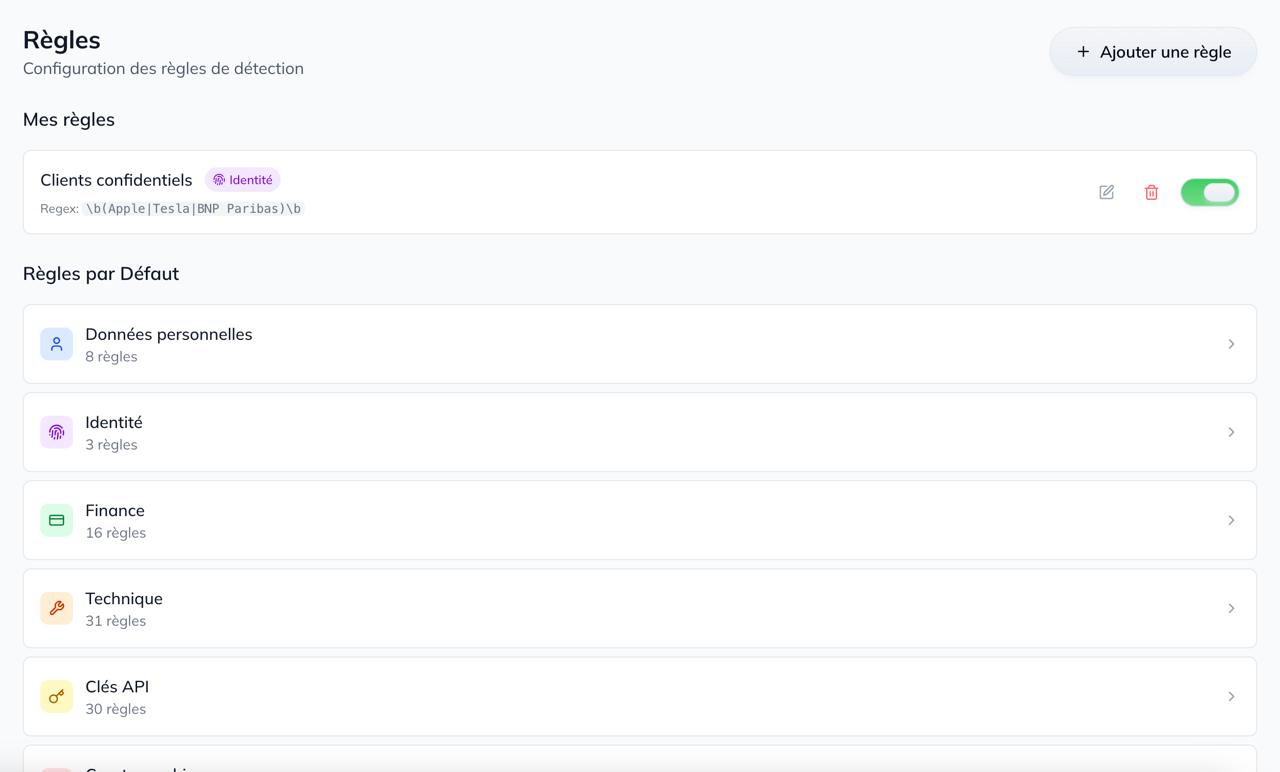

PromptGuard watches what matters, hides what shouldn't be shared, and helps your team stay in control.

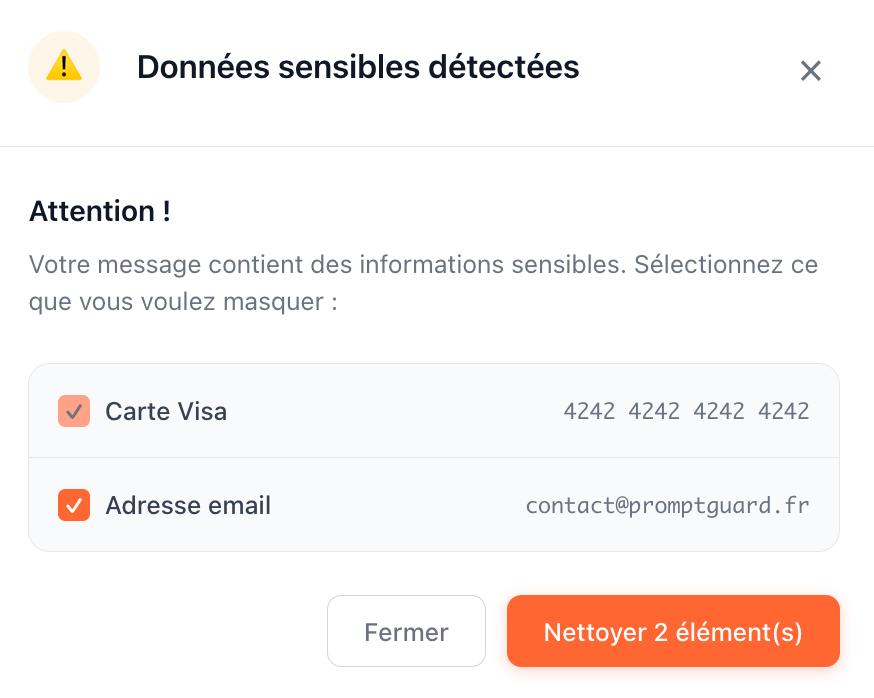

Real-time detection, automatic masking

PromptGuard analyzes your prompt as you type and instantly detects sensitive data such as API keys, email addresses, and secrets. It automatically masks them before they are ever sent to an LLM.
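For illustration only, here is a minimal sketch of how pattern-based detection and masking can work in principle. It is not PromptGuard's implementation: the `Rule` type, the `RULES` list, its regular expressions, and the `maskPrompt` function are all hypothetical, and a real detector would need much broader coverage.

```typescript
// Hypothetical detection rules: each pairs a placeholder label with a regex.
// These patterns are illustrative assumptions, not PromptGuard's actual rules.
interface Rule {
  label: string;
  pattern: RegExp;
}

const RULES: Rule[] = [
  // Email addresses (deliberately simplified pattern)
  { label: "EMAIL", pattern: /[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}/g },
  // AWS access key IDs: "AKIA" followed by 16 uppercase letters or digits
  { label: "AWS_KEY", pattern: /\bAKIA[0-9A-Z]{16}\b/g },
  // Generic "password=...", "token: ..." style assignments
  { label: "SECRET", pattern: /\b(?:password|passwd|token|secret)\s*[:=]\s*\S+/gi },
];

interface MaskResult {
  masked: string;
  findings: { label: string; count: number }[];
}

// Replace every match with a placeholder such as [EMAIL] and count matches per rule.
// Everything happens in memory; no network call is made.
function maskPrompt(prompt: string): MaskResult {
  let masked = prompt;
  const findings: { label: string; count: number }[] = [];
  for (const { label, pattern } of RULES) {
    let count = 0;
    masked = masked.replace(pattern, () => {
      count++;
      return `[${label}]`;
    });
    if (count > 0) findings.push({ label, count });
  }
  return { masked, findings };
}

// Example prompt containing three kinds of sensitive data.
const result = maskPrompt(
  "Email jane.doe@example.com, key AKIAABCDEFGHIJKLMNOP, password=hunter2"
);
console.log(result.masked); // Email [EMAIL], key [AWS_KEY], [SECRET]
```

Placeholders like `[EMAIL]` keep the prompt readable for the model while the original values never leave the page. Fixed pattern lists are easy to audit but miss secrets that have no recognizable shape, so a production tool would likely combine them with other heuristics.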

Nothing is sent to our servers

PromptGuard runs 100% locally in your browser: your prompts and sensitive data are never sent to, stored on, or logged by our servers. Everything stays on your machine, so your data remains private.

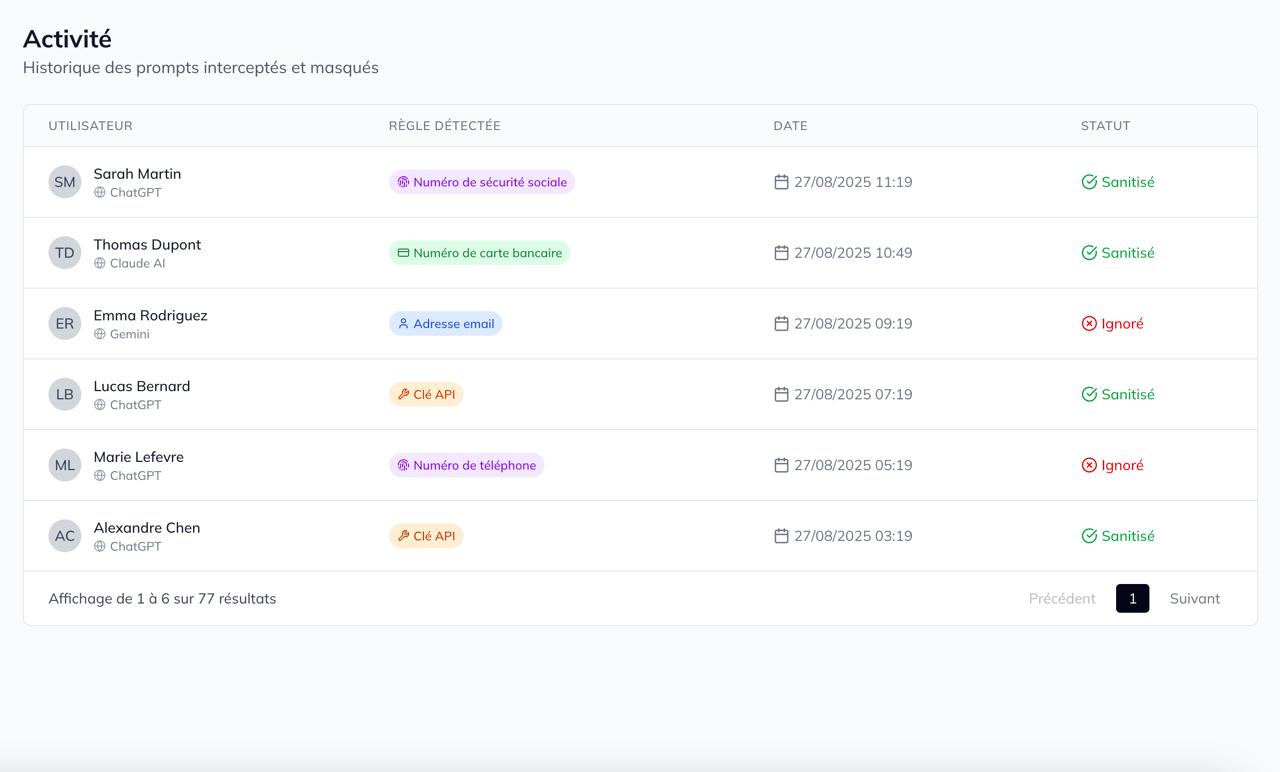

A dashboard to detect leaks & educate your team

Track detection activity across your team, get alerted when risky data is masked, and help teammates adopt safer AI practices without blocking their use of AI tools.

Never leak sensitive data to an LLM again

Start protecting your prompts today

Deploy PromptGuard in minutes and keep sensitive data from ever leaking to an LLM.